This week, Dashbot and Mercedes-Benz Research & Development North America (MBRDNA) hosted a meetup to dig deeper into the context of voice and its impact on conversational design.

Insights were presented by:

- Mihai Antonescu, Senior Software Engineer on Speech and Digital Assistant at MBRDNA

- Lisa Falkson, Senior VUI Designer for Alexa Communications at Amazon

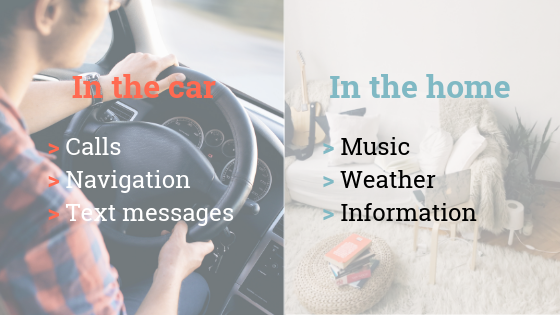

Different contexts, different use cases

While the top 3 reasons for addressing smart speakers at home are listening to music, checking the weather and asking for information, Lisa shared that drivers interact with their car voice assistants mainly to initiate phone calls, access navigation, or send a text.

Why is that? Context dictates voice use cases, because it shapes both the framework and the content of the conversation.

The 6 dimensions of context

Lisa walked us through the various dimensions of context that have to be taken into account to understand how it impacts voice use cases.

- Environment

While car passenger compartments are physically pretty standardized, the set-up possibilities for a smart speaker at home are virtually limitless, with each home being different.

- Connectivity

While at home access to the internet is generally not an issue thanks to WiFi, in-car voice assistants rely on a mobile signal that can be patchy. Yet drivers will expect to be able to use voice control anywhere, even when offline.

- Noise

In the car, the number of passengers (think kids in the back seat), the type of road and the driving speed have a tremendous impact on the level of noise that will interfere with voice recognition. Similarly, a home can be anywhere from silent to extremely noisy, depending on the activity performed while using the smart speaker.

- Distance to microphone

At home, the user can be at virtually any distance from the mic, sometimes shouting “Alexa” or “OK Google” from another room. In the car, the physical space is limited but the issue of the direction of the mic remains. It proves difficult to cater to both the driver and the passengers.

- Hands free or busy

In the car, hands are on the wheel and must stay there. At home, there is a mix of both, as the number of activities performed is virtually unlimited. The most common “hands busy” activity while using voice is actually cooking.

- Eyes on or off the screen

At home, the user can always turn around to use a screen. In the car, there is only one rule: eyes on the road, not on a screen! The National Highway Traffic Safety Administration (NHTSA) actually sets guidelines on the maximum “glanceability” of in-car interfaces: all glances away from the forward roadway have to be under 2 seconds.

As a conclusion, Lisa stressed that even though users claim they want the same voice assistants everywhere, you can’t just put Alexa or Google Home in a car and expect it to deliver a satisfying user experience. Conversations and devices have to be designed specifically for the context and the use cases they will support.

The 3 golden rules for in-car voice design

With Mihai, we had the opportunity to take a deep dive into the specifics of designing voice for cars. Mihai is involved in the development of MBUX (Mercedes-Benz User Experience), the personalized, AI-powered in-car experience that Mercedes-Benz offers its drivers. It encompasses voice control, touch screens, touchpads and theme displays.

Conversation-wise, the car is a very specific environment: drivers’ focus will always be first on driving. This may seem trivial, but it has a massive impact on conversation design. First and foremost, traffic events will cause unexpected pauses in the conversation flow. This contingency is easily understood by a human, but how can a voice assistant deal with random breakdowns in the conversation?

In addition, focusing on the road means drivers cannot get too involved in a learning process with their voice assistant. The conversation has to be as straightforward as possible in order not to distract the driver. The NHTSA limits task completion to 12 seconds max.
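To make the two limits quoted in this post concrete (glances under 2 seconds, tasks completed within 12 seconds), here is a minimal sketch of how a design team might check a recorded voice task against them. The `TaskTrace` record and the `check_task` helper are hypothetical names invented for this illustration, not part of any NHTSA or MBUX tooling.

```python
from dataclasses import dataclass
from typing import List

# Limits as quoted in this post; treated here as illustrative constants.
MAX_GLANCE_SECONDS = 2.0
MAX_TASK_SECONDS = 12.0


@dataclass
class TaskTrace:
    """Hypothetical record of one voice task measured during a test drive."""
    name: str
    glance_durations: List[float]  # seconds per glance away from the road
    total_duration: float          # seconds from wake word to task completion


def check_task(trace: TaskTrace) -> List[str]:
    """Return human-readable violations; an empty list means the task passes."""
    problems = []
    for i, glance in enumerate(trace.glance_durations, start=1):
        # The post says glances must stay *under* the limit.
        if glance >= MAX_GLANCE_SECONDS:
            problems.append(f"glance {i} lasted {glance:.1f}s")
    if trace.total_duration > MAX_TASK_SECONDS:
        problems.append(f"task took {trace.total_duration:.1f}s")
    return problems
```

A task with two short glances and a 9.5-second duration would return an empty list, while a 14-second task containing a 2.4-second glance would return two violations.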

Accordingly, Mihai shared with us the 3 principles to apply to deliver a truly satisfying in-car voice experience.

- Use context to keep it simple

In-car voice assistants gather a lot of metadata (e.g. destination, temperature, speed) that can be used to communicate the right information to the right user at the right time. For example, the conversation will not have the same content and path when interacting with the driver while at high speed on the freeway versus with the passengers when the car is parked.

- Take advantage of multimodal

The car compartment is rich in interaction modalities, from audio to display, that can complement each other. Therefore, voice design needs to think further than voice and integrate the whole wealth of the vehicle context. This opens the door to very exciting “infotainment” experiences for the future.

- Offline capabilities are key

As also stressed by Lisa, cars take their driver to places where mobile signal can be nonexistent. However, drivers will still need their voice assistant to remain operational. Conversation design has to anticipate this off-grid eventuality and find ways to maintain consistency in the experience.
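As a rough illustration of the first and third principles, the sketch below picks a simpler, voice-only answer when the driver is speaking while the car is moving, and falls back to on-board data when there is no connection. Everything here is an assumption made for the example: the `VehicleContext` fields, the `present_results` and `answer` functions, and the two search callables are hypothetical and do not describe how MBUX is implemented.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class VehicleContext:
    """Hypothetical snapshot of the metadata available to the assistant."""
    speed_kmh: float
    speaker: str   # "driver" or "passenger"
    online: bool


def present_results(results: List[str], ctx: VehicleContext) -> Dict[str, object]:
    """Decide what to say and what to show, given who is asking and when."""
    if not results:
        return {"speech": "I could not find anything.", "display": []}
    driver_moving = ctx.speaker == "driver" and ctx.speed_kmh > 0
    if driver_moving:
        # Keep it simple: speak one option, put nothing on screen to read.
        speech = f"The closest match is {results[0]}. Should I navigate there?"
        return {"speech": speech, "display": []}
    # Parked car or passenger: a fuller list on the display is acceptable.
    return {"speech": f"I found {len(results)} options.", "display": results}


def answer(
    query: str,
    ctx: VehicleContext,
    cloud_search: Callable[[str], List[str]],
    local_search: Callable[[str], List[str]],
) -> Dict[str, object]:
    """Use the cloud backend when connected, else the on-board fallback."""
    search = cloud_search if ctx.online else local_search
    return present_results(search(query), ctx)
```

The response has the same shape whether the results came from the cloud or from the on-board fallback, which is one simple way to keep the experience consistent when the signal drops.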

Hey Mercedes! Testing MBUX in the new A-Class

For the meetup, MBRDNA showcased one of their brand new A-Class vehicles, featuring MBUX.

We had the exciting opportunity to try MBUX in real life. After we hopped in the car, we triggered the system with the “Hey Mercedes!” wake word.

After hearing from Mihai and Lisa, we could not resist challenging the system to see how it applied the above principles to handle context. We broke the conversation into 3 pieces, as if distracted by driving. First we asked for an Italian restaurant, then we asked for the weather in San Francisco. After a pause, we told MBUX “let’s go there!”. We were delighted to hear the system start the navigation to the Italian restaurant we had previously selected.
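The behavior we observed can be approximated with a very small piece of dialog state: remember the last place the user selected, and do not let an unrelated informational turn overwrite it. The sketch below is only our illustration of that idea. The `DialogContext` class and the intent names are hypothetical, it assumes intents have already been recognized upstream, and it says nothing about how MBUX actually resolves “there”.

```python
from typing import Optional


class DialogContext:
    """Keeps the last selected destination across unrelated turns."""

    def __init__(self) -> None:
        self.last_destination: Optional[str] = None

    def handle(self, intent: str, value: Optional[str] = None) -> str:
        if intent == "select_place":
            # A place the user picked becomes the referent for "there".
            self.last_destination = value
            return f"Selected {value}."
        if intent == "weather":
            # Informational turn: answer it, but keep the destination intact.
            return f"Here is the weather in {value}."
        if intent == "navigate_there":
            if self.last_destination is None:
                return "Where would you like to go?"
            return f"Starting navigation to {self.last_destination}."
        return "Sorry, I did not get that."
```

Replaying our three turns (select the restaurant, ask for the weather in San Francisco, then “let’s go there!”) against this sketch starts navigation to the restaurant rather than to San Francisco, because the weather turn never touches `last_destination`.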

A glimpse into the future

Both Lisa and Mihai have exciting visions of how context will be increasingly well integrated into conversations to enhance the voice experience.

Particularly in the car, they expect the following trends to impact voice design in the near future.

- Personalization

The ability for voice assistants to better know the user and remember their preferences will enable better search and navigation experiences by offering more relevant options. For example, if you have a loyalty program with a specific coffee chain, your voice assistant would suggest the locations of that brand at the top of the list.

- Additional sensors and trackers

The ability for voice assistants to be fed with facial and emotional data will allow many new voice use cases that could improve road safety, for instance by interacting with the driver when signs of distraction are registered.

- Autonomous vehicles

Self-driving cars will remove the need for constant focus on the road and let hands break free from the wheel. This new user behavior will create a wealth of new modalities for conversations. Lisa also foresees that this will come with a range of new challenging use cases to solve, such as reassuring the driver after the car took over for emergency braking.
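Going back to the personalization trend, the coffee chain example boils down to a ranking rule: brands the user has a loyalty program with come first, and distance breaks ties. Here is a minimal sketch under that assumption. The `rank_places` function and the `(name, brand, distance_km)` tuple layout are hypothetical choices made for this example.

```python
from typing import List, Set, Tuple

Place = Tuple[str, str, float]  # (name, brand, distance_km)


def rank_places(places: List[Place], loyalty_brands: Set[str]) -> List[Place]:
    """Put loyalty-program brands first, then sort by distance."""
    # False sorts before True, so places whose brand is in the loyalty set lead.
    return sorted(places, key=lambda p: (p[1] not in loyalty_brands, p[2]))
```

With this rule, a loyalty-brand location 2 km away is suggested ahead of another brand's location 300 m away. A production system would likely weigh distance and preference more carefully, but the sketch shows where the personal data enters the ranking.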

In the broader scheme, Mihai reckons several voice assistants will still coexist, each with their specific strength, but will better communicate amongst each other to better cater to users’ preferences across various contexts.

In addition, Lisa stressed that multimodality is still in its infancy. At the moment, little is known about where users are looking when they interact with their voice assistant. Even with screen-enabled devices like the Echo Show, it remains unclear if users consider the screen at all. Besides, current Alexa experiences are still primarily designed only for voice and are not visually appealing. Lisa believes the future holds opportunities for truly multimodal experiences that will combine a compelling voice experience with exciting visual content that will draw the user in.

These learnings and predictions are very exciting for us at Dashbot. As voice experiences become more context-aware, optimizing them will become more complex and more vital than ever.

Additional resources

Check out our blog posts

- “How consumers really use Alexa and Google Home Voice Assistant”

- “Primer on the context of voice: in the car, at home & on the go”

About Dashbot

Dashbot is a conversational analytics platform that enables enterprises and developers to understand user behaviors, optimize response effectiveness, and increase user satisfaction through actionable data and tools.

In addition to traditional analytics like engagement and retention, we provide chatbot-specific metrics including NLP response effectiveness, sentiment analysis, conversational analytics, and full chat session transcripts.

We also have tools to take action on the data, like our live-person takeover of chat sessions and broadcast messaging for re-engagement.

We support Alexa, Google Home, Facebook Messenger, Slack, Twitter, Kik, SMS, web chat, and any other conversational interface.
